Building Caddy – an AI support tool for adviser teams

At SCVO’s session on Putting People and Values at the Heart of AI, Stuart Pearson shared an overview of Caddy, the adviser support system from Citizens Advice.

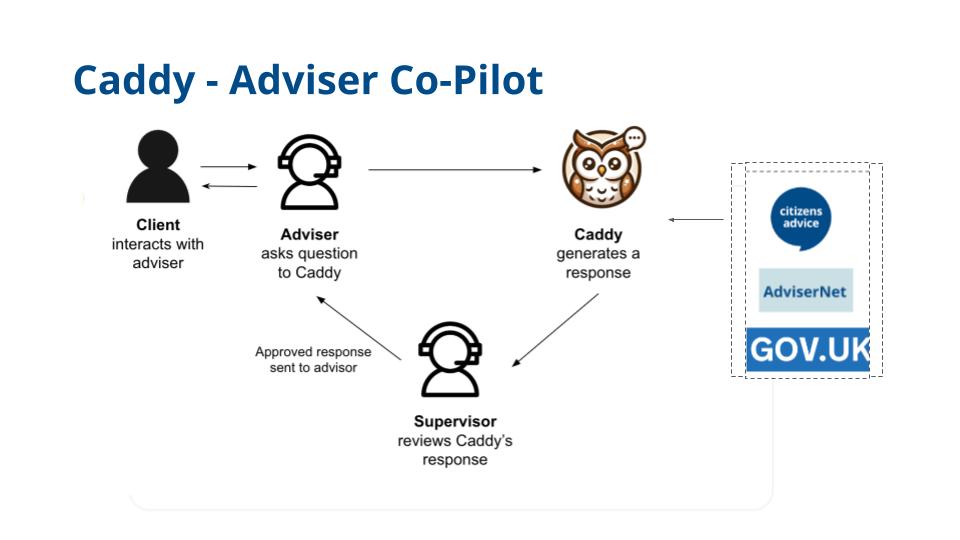

Caddy is a bot powered by a generative AI large language model (LLM), built by Citizens Advice in Greater Manchester. It is designed to help advice teams answer clients more quickly, build adviser confidence and reduce demands on supervisors.

Caddy was designed to amplify the vital human element of the Citizens Advice service: clients always speak with real advisers. The system keeps a human in the loop, with supervisors reviewing every draft response before it reaches an adviser, and it draws only on trusted sources. It is built to support advisers, not replace them. Since 2024, Citizens Advice and the I.AI team have been collaborating to develop a scalable version of Caddy for wider use by other advice providers, while preserving this human-centric model.

Overview of Caddy Adviser support process

Here are audience questions from the session, with responses from Stuart.

Are you using the answers from Caddy to upskill your advisers to minimise the reliance on the AI LLM?

We're not yet using Caddy's responses directly to upskill advisers, but it is a key element of our strategic roadmap. We already use Caddy's interaction data in a dedicated dashboard that tracks the questions advisers ask and the subject areas they cover. This lets us build a comprehensive picture of each adviser's knowledge strengths and, more importantly, their potential knowledge gaps.
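To make the dashboard idea concrete, here is a minimal sketch of how interaction logs could be aggregated per adviser and subject area. It is not Caddy's actual dashboard: the `Interaction` record, the subject labels and the `threshold` for flagging a possible gap are all hypothetical assumptions chosen for illustration.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Interaction:
    """One question an adviser put to the assistant (hypothetical record shape)."""
    adviser_id: str
    subject: str  # e.g. "benefits", "housing", "debt"


def subject_counts(interactions):
    """Count how often each adviser asked about each subject area."""
    counts = defaultdict(Counter)
    for item in interactions:
        counts[item.adviser_id][item.subject] += 1
    return counts


def possible_gaps(interactions, threshold=3):
    """Flag subjects an adviser has asked about at least `threshold` times.

    Frequent questions in one area are treated here as a *possible*
    knowledge gap to discuss in supervision, not a definitive judgement.
    The threshold value is an illustrative assumption.
    """
    return {
        adviser: sorted(s for s, n in subjects.items() if n >= threshold)
        for adviser, subjects in subject_counts(interactions).items()
    }


# Example: adviser "a1" asks about housing four times and debt once.
log = [Interaction("a1", "housing")] * 4 + [
    Interaction("a1", "debt"),
    Interaction("a2", "benefits"),
]
print(possible_gaps(log))
```

A simple count like this is only a starting point; a real dashboard would likely also weigh how complex each subject area is and how often supervisors had to correct drafts.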

Can you tell us what platform was used to build Caddy? More widely, what are the most ethical models or platforms to use to build a chatbot or agent?

Caddy currently uses Anthropic’s Claude as the LLM that generates its responses. Claude is trained with a technique Anthropic calls ‘Constitutional AI’, which aims to align the model with human values and goals from the outset. However, ethical AI is an ongoing process rather than something settled by the choice of model, so continuous evaluation and improvement are crucial.

Will development of Caddy be funded long-term and remain open source? It could be useful for organisations that also run a helpline.

The future development and funding of Caddy is currently a live topic. It has not received any direct funding; it has been a collaborative effort, with people giving up their time. It was built with a local charity, scaled with national Citizens Advice and supported by the Cabinet Office. As it moves from pilot into production, a more robust funding model will be required. Citizens Advice plans a national roll-out of Caddy in 2025. The whole project is open source, so it is available for other organisations to use.

Is including a supervisor to filter AI-generated responses in the Caddy system an unnecessary step? Would resources be better spent on staff training?

Staff training is essential, but the supervisor role in Caddy is not an unnecessary step. It is a crucial safeguard that keeps human oversight in the advice-giving process, mitigates the risk of AI-generated errors and keeps people central to service delivery, especially in areas where accuracy is paramount. Future automation is planned for responses that prove consistently correct, but for now the human-in-the-loop approach is vital for maintaining reliability and user trust.
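The human-in-the-loop step can be pictured as a review queue: every AI draft starts as pending, a supervisor approves or rejects it, and only approved drafts are ever released to the adviser. The sketch below is an illustration under that assumption, not Caddy's implementation; the class and method names (`ReviewQueue`, `submit`, `review`, `release_to_adviser`) are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    """An AI-generated draft answer awaiting supervisor review (hypothetical)."""
    question: str
    text: str
    status: Status = Status.PENDING
    supervisor_note: str = ""


class ReviewQueue:
    """Holds drafts until a human supervisor approves or rejects them."""

    def __init__(self) -> None:
        self._drafts: list[Draft] = []

    def submit(self, question: str, draft_text: str) -> Draft:
        # Every draft starts as PENDING; nothing goes straight to the adviser.
        draft = Draft(question, draft_text)
        self._drafts.append(draft)
        return draft

    def pending(self) -> list[Draft]:
        # What the supervisor still needs to look at.
        return [d for d in self._drafts if d.status is Status.PENDING]

    def review(self, draft: Draft, approve: bool, note: str = "") -> None:
        # The supervisor's decision and any comment are stored with the draft.
        draft.status = Status.APPROVED if approve else Status.REJECTED
        draft.supervisor_note = note

    def release_to_adviser(self, draft: Draft) -> Optional[str]:
        # Only approved drafts are ever shown to the adviser.
        return draft.text if draft.status is Status.APPROVED else None
```

Keeping the approval status on each draft also leaves an audit trail of what a supervisor signed off, which matters where accuracy and accountability are paramount.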

How does Caddy work with OISC accreditation?

Caddy fits within OISC accreditation requirements because it never provides advice directly: every AI-generated response is validated by a human supervisor. That supervisor, operating within the organisation's OISC accreditation, checks that the advice meets the necessary standards, which keeps the service compliant.

How long did it take to develop Caddy?

An initial prototype was built and deployed in around four weeks; the current, more robust version took approximately 12 months. The longer timeframe is mainly due to the project's volunteer-driven nature: with support from the Cabinet Office, the team could dedicate roughly two days a week to it, which slowed the overall pace of development.

How easy is it to restrict an LLM to trusted sources like Caddy does? Does this require expensive development?

Caddy restricts its LLM to trusted sources using Retrieval Augmented Generation (RAG), a technique that is relatively straightforward and cost-effective to implement. With RAG, the system first retrieves the passages most relevant to a question from a specific set of trusted documents, then asks the LLM to answer using only those passages. This improves accuracy, and it is now a common, accessible practice rather than something that requires expensive, bespoke development.
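The two RAG steps (retrieve, then generate from the retrieved text) can be sketched in a few lines. This is a minimal illustration, not Caddy's code: the `Source` records and prompt wording are assumptions, and simple word overlap stands in for the embedding-based similarity search most production RAG systems use. The final call to an LLM such as Claude is deliberately left out; the sketch stops at building the prompt.

```python
import re
from dataclasses import dataclass


@dataclass
class Source:
    """A passage from a trusted document (titles and text are illustrative)."""
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lower-case the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, sources: list[Source], k: int = 2) -> list[Source]:
    """Return up to k sources sharing the most words with the question.

    Word overlap is a stand-in for embedding similarity, used here to keep
    the sketch dependency-free. Sources with no overlap are dropped.
    """
    q = tokenize(question)
    scored = [(len(q & tokenize(s.text)), s) for s in sources]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


def build_prompt(question: str, sources: list[Source]) -> str:
    """Assemble a prompt that tells the model to answer only from the sources."""
    context = "\n\n".join(
        f"[{s.title}]\n{s.text}" for s in retrieve(question, sources)
    )
    return (
        "Answer the question using ONLY the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

Because the model only sees the retrieved passages, keeping answers grounded is mostly a matter of curating the document set, which is why RAG does not usually require retraining or fine-tuning the model itself.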